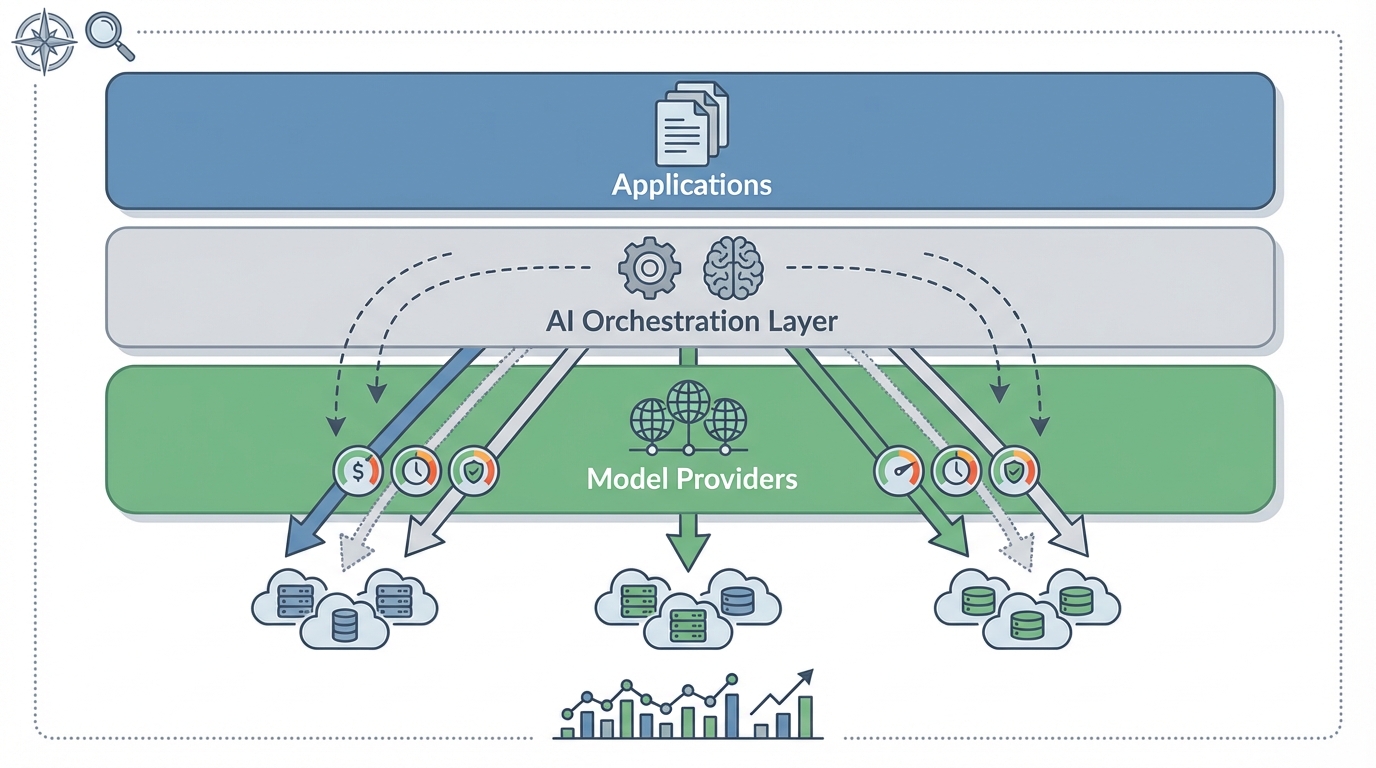

Thesis: As core AI inference becomes interchangeable, strategic value and negotiation power are migrating from single-vendor model providers to multi-model routing and governance layers that aggregate them.

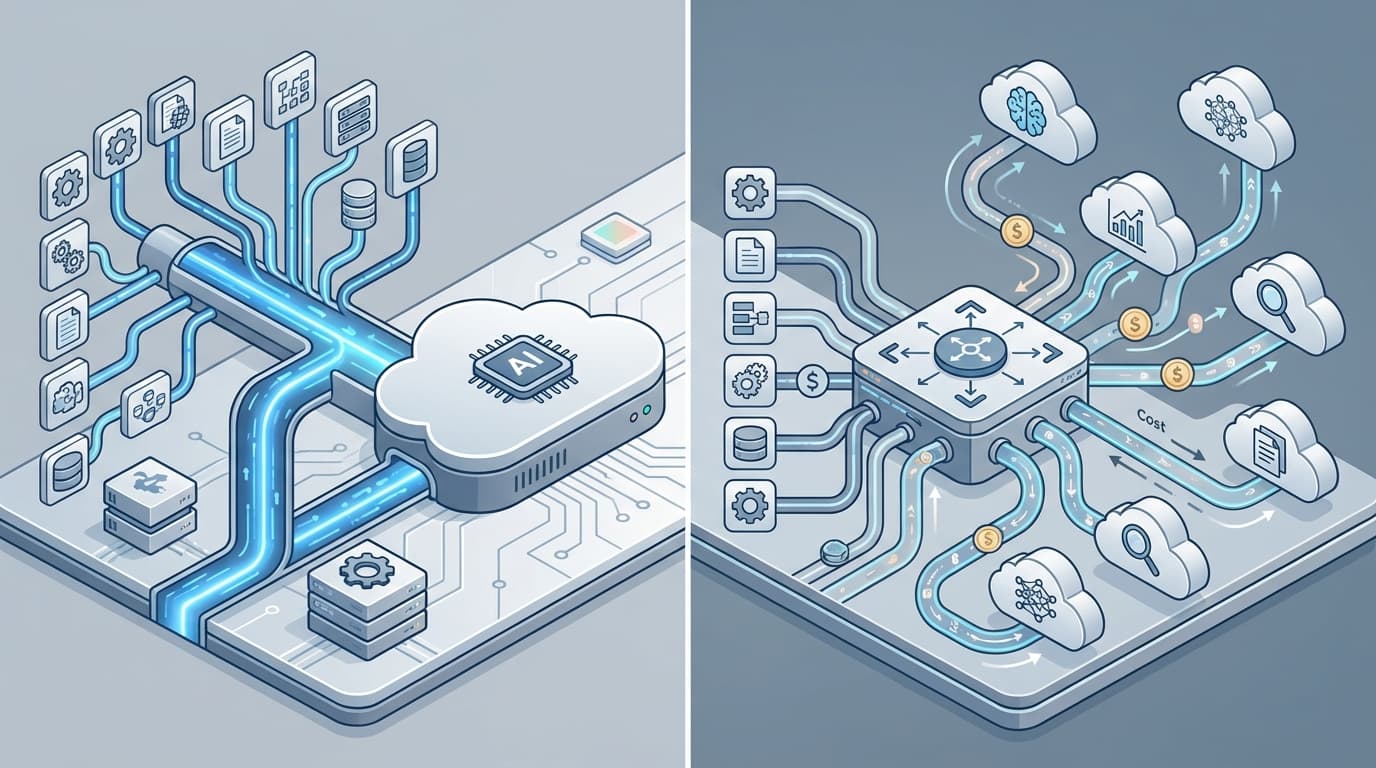

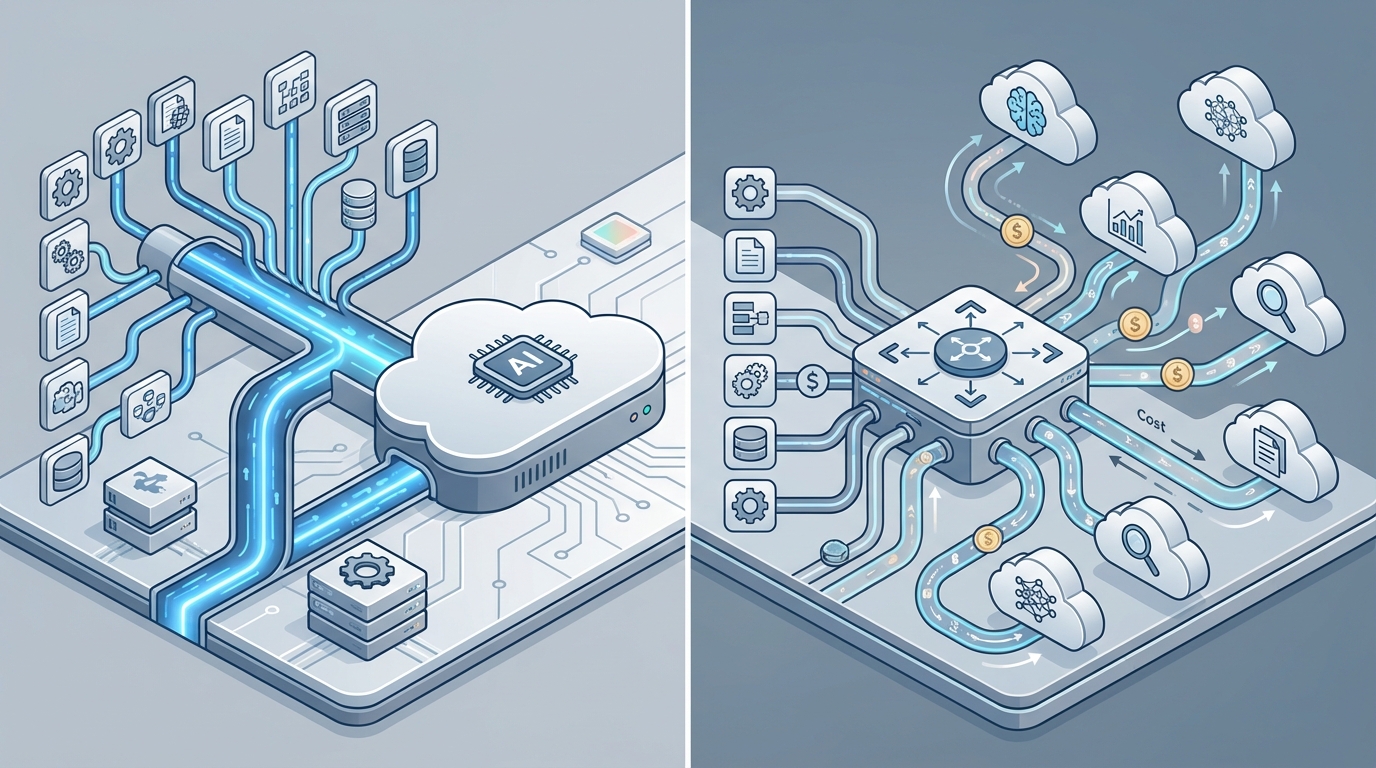

THE CASE: From Single-Vendor Stacks to Routing-First Architectures

Between 2023 and 2026, enterprise AI procurement evolved from “choose one model provider” to “choose a routing and governance layer.” Early large-scale deployments typically centered on a single ecosystem: OpenAI enterprise contracts, Azure OpenAI within Microsoft environments, or native Google and AWS offerings. Annual commitments of USD 50,000–500,000+, combined with uncapped per-token spend, quickly produced sticker shock as usage scaled.

Two realities soon emerged. First, token consumption exploded: many organizations crossed 10 billion tokens per month within months of adopting AI for customer support, content workflows, and analytics. Second, procurement teams recognized a replay of 2010s cloud lock-in—this time with even steeper cost curves and less visibility.

In response, a wave of multi-model platforms and gateways arrived: Liminal AI for governance, Vercel AI Gateway and Cloudflare AI Gateway for traffic management and caching, Kong AI Gateway and LiteLLM for API-first routing, Bifrost (Maxim) for ultra-low-latency inference, and domain-specific orchestrators like Kore.ai and Gumloop.

Sales decks and analyst notes converged on similar metrics. According to Gartner, multi-model adopters report roughly 47% higher AI ROI than single-vendor approaches, and vendor-published studies cite 40–60% lower effective platform cost compared to standalone enterprise agreements. Vendor-reported benchmarks reinforced the shift: Vercel’s routing layer is said to add less than 20 ms of overhead, Bifrost advertises an 11 µs latency impact, and Cloudflare’s edge caching can eliminate many redundant model calls.

THE PATTERN: Aggregation Layers Arbitrate Commoditized Intelligence

The multi-model versus single-vendor debate is less about developer convenience than about power distribution when a capability becomes a commodity. As core inference commoditizes, value migrates away from model providers toward aggregation and control points that sit above them.

This dynamic has recurred across technology domains:

- Content delivery networks became critical once hosting and bandwidth commoditized.

- Multi-cloud management tools emerged when compute resources converged on price-performance parity.

- Payment aggregators captured margins by unifying diverse processors under standardized interfaces.

AI is entering a similar phase: interchangeable models, transparent per-token economics, and unified APIs are shifting the locus of differentiation to routing, caching, and governance layers.

Key Drivers of the Shift

- Substitutable models: GPT-4, Claude, Gemini, Llama variants, and specialized offerings are often interchangeable across many enterprise tasks.

- Visible economics: enterprises can benchmark cost-per-outcome across providers, pressuring vendors to align rates with marginal costs.

- Unified abstractions: routing layers normalize APIs, enabling seamless workload migration between models.

- Centralized governance: logging, policy enforcement, and compliance naturally reside in a gateway that mediates all traffic.

Once a routing layer is in place, an enterprise effectively turns model providers into a set of interchangeable suppliers. The resulting competitive supply framework underpins the reported 40–60% cost savings and ROI improvements—effects emerging from structural shifts rather than isolated platform optimizations.
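
The cost-per-outcome comparison named under "Visible economics" can be sketched in a few lines of Python. This is a minimal illustration, not a benchmark: the model labels, prices, token counts, and success rates are hypothetical placeholders, and `ModelTrial` and `cost_per_outcome` are names introduced here for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTrial:
    """One model's results on an internal evaluation set (hypothetical fields)."""
    name: str
    usd_per_million_tokens: float
    avg_tokens_per_task: float
    success_rate: float  # fraction of tasks judged acceptable, in (0, 1]


def cost_per_outcome(trial: ModelTrial) -> float:
    """Expected spend per successfully completed task."""
    if not 0 < trial.success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    cost_per_task = trial.usd_per_million_tokens * trial.avg_tokens_per_task / 1_000_000
    return cost_per_task / trial.success_rate


# Illustrative inputs only; these are not measured figures.
trials = [
    ModelTrial("model-a", usd_per_million_tokens=10.0, avg_tokens_per_task=2000, success_rate=0.95),
    ModelTrial("model-b", usd_per_million_tokens=2.0, avg_tokens_per_task=2500, success_rate=0.80),
]

# Rank suppliers by what a successful task actually costs, not by list price.
ranked = sorted(trials, key=cost_per_outcome)
for trial in ranked:
    print(f"{trial.name}: ${cost_per_outcome(trial):.5f} per successful task")
```

Dividing by the success rate is the design choice that matters: a cheaper model that fails more often has to be retried or escalated, so the comparison is made per acceptable result rather than per token.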

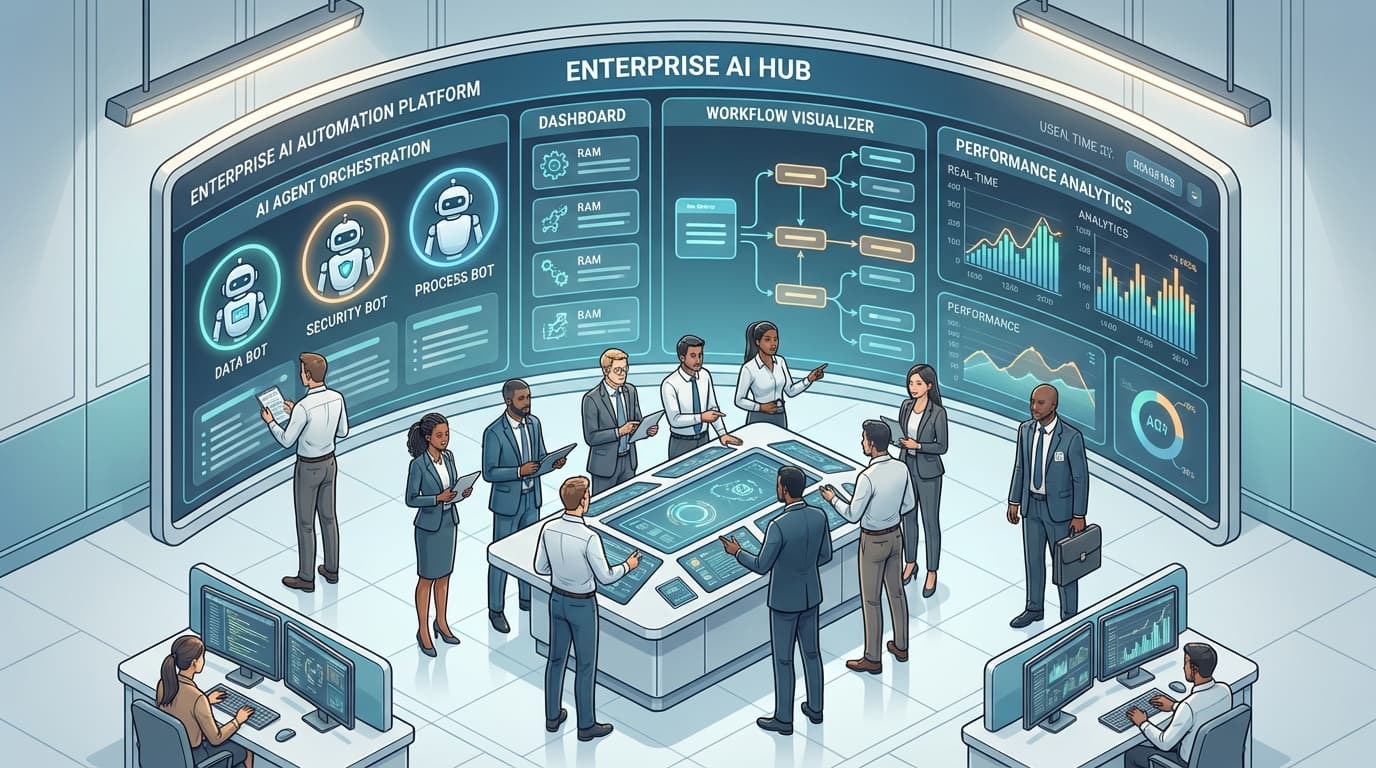

THE MECHANICS: Feedback Loops and Data Advantages

As traffic centralizes through multi-model platforms, reinforcing loops accelerate their market position:

- Increased volume → richer cross-model performance and cost telemetry.

- Richer data → more precise routing policies and cost optimizations.

- Better optimization → clearer savings and reliability for enterprises.

- Visible benefits → wider adoption of the routing layer.

Over time, these platforms become de facto market makers in enterprise AI. They sit between fragmented model supply and concentrated enterprise demand, using standardized interfaces to arbitrate quality, cost, and compliance at scale. Model vendors, in contrast, see only the usage within their own silos and lack the cross-vendor benchmarks needed for holistic optimization.
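
The first two steps of the loop (more traffic yields telemetry, telemetry refines routing) can be sketched as a rolling latency estimate per model. This is an assumption-laden toy: `LatencyTelemetry`, the smoothing factor `alpha`, and the `min_samples` threshold are hypothetical names and parameters chosen for illustration, and an exponentially weighted moving average is only one of several reasonable estimators.

```python
from typing import Dict, Optional


class LatencyTelemetry:
    """Tracks an exponentially weighted moving average (EWMA) of latency per model."""

    def __init__(self, alpha: float = 0.5) -> None:
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.ewma_ms: Dict[str, float] = {}
        self.samples: Dict[str, int] = {}

    def record(self, model: str, latency_ms: float) -> None:
        """Fold one observed request latency into the model's running estimate."""
        previous = self.ewma_ms.get(model)
        if previous is None:
            self.ewma_ms[model] = latency_ms
        else:
            self.ewma_ms[model] = self.alpha * latency_ms + (1 - self.alpha) * previous
        self.samples[model] = self.samples.get(model, 0) + 1

    def fastest(self, min_samples: int = 3) -> Optional[str]:
        """Return the model with the lowest EWMA among those with enough samples."""
        eligible = [m for m, n in self.samples.items() if n >= min_samples]
        if not eligible:
            return None
        return min(eligible, key=lambda m: self.ewma_ms[m])


telemetry = LatencyTelemetry(alpha=0.5)
for latency in (100.0, 100.0, 100.0):
    telemetry.record("model-a", latency)
for latency in (300.0, 50.0, 50.0):
    telemetry.record("model-b", latency)

choice_before = telemetry.fastest()   # one slow outlier still weighs on model-b
telemetry.record("model-b", 50.0)     # additional traffic refines the estimate
choice_after = telemetry.fastest()
```

With few observations, a single slow request dominates the estimate; as volume grows, the estimate settles and the routing choice can change. A gateway observing every provider can maintain this table for all of them, which a single vendor cannot do for its competitors.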

INCENTIVES AND CONSTRAINTS ACROSS THE ECOSYSTEM

Enterprise Evolution: Simplicity to Optionality

Initial AI pilots favor single-vendor simplicity: one security review, one procurement track, deep cloud integrations, and early access to leading-edge capabilities. But as token volumes exceed ~10 billion per month, enterprises confront:

- Cost exposure: single-vendor pricing that often runs 20–40% above a mixed-model portfolio.

- Capability gaps: modality, language, or safety tool mismatches that are costly to remedy.

- Operational risk: regional outages or policy changes that simultaneously impact all workloads.

- Governance sprawl: fragmented visibility when teams experiment across multiple vendors.

Routing layers address these constraints by normalizing traffic, consolidating policies, and enabling selective substitution—advantages that often repay integration effort within 6–12 months, according to vendor-reported customer case studies.
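
The arithmetic behind a payback estimate can be made explicit with a short sketch. All inputs below are hypothetical round numbers chosen for illustration (they are not figures from any vendor study), and `blended_rate` and `payback_months` are helper names introduced here.

```python
from typing import List, Tuple


def blended_rate(mix: List[Tuple[float, float]]) -> float:
    """Weighted average price for a list of (traffic_share, usd_per_million_tokens)."""
    total_share = sum(share for share, _ in mix)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    return sum(share * rate for share, rate in mix)


def payback_months(
    integration_cost_usd: float,
    monthly_tokens_millions: float,
    single_vendor_rate: float,
    mixed_rate: float,
) -> float:
    """Months until cumulative token savings cover the one-time integration cost."""
    monthly_savings = monthly_tokens_millions * (single_vendor_rate - mixed_rate)
    if monthly_savings <= 0:
        return float("inf")  # no savings, so the integration never pays back
    return integration_cost_usd / monthly_savings


# Hypothetical scenario: 10 billion tokens per month, expressed in millions.
monthly_tokens_millions = 10_000
single_vendor_rate = 10.0  # USD per million tokens, assumed

# Assumed portfolio: half the traffic stays on the premium model, half moves to a cheaper one.
mixed_rate = blended_rate([(0.5, 10.0), (0.5, 5.0)])

premium = single_vendor_rate / mixed_rate - 1  # single-vendor price relative to the mix
months = payback_months(200_000, monthly_tokens_millions, single_vendor_rate, mixed_rate)

print(f"blended rate: ${mixed_rate:.2f} per million tokens")
print(f"single-vendor premium: {premium:.0%}")
print(f"payback: {months:.1f} months")
```

The result is sensitive to how much traffic can actually be moved to cheaper models and to the real integration cost, so the same calculation is worth rerunning with an organization's own figures.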

Model Provider Responses: Bundling, Features, and Partnerships

Model vendors face a platform conundrum. They seek exclusive enterprise relationships via volume discounts, compute bundles, and proprietary features that resist abstraction. Simultaneously, they must integrate with popular routing layers (e.g., Kong, Vercel, Cloudflare, Liminal) to avoid exclusion from enterprise portfolios.

- Bundled deals & volume tiers aim to preserve single-vendor lock-in.

- Unique model capabilities (e.g., specialized reasoning engines) serve as differentiation points beyond generic text completion.

- Gateway integrations provide easier distribution even as vendors pursue direct contracts.

As enterprise traffic shifts through aggregators, per-token rates tend toward marginal cost, with profit migrating to specialized services and frontier models that resist easy substitution.

Platform Engineering: Abstraction and Latency Mastery

Technical foundations of multi-model platforms include:

- Unified API layers: transforming and routing calls across diverse model endpoints.

- Intelligent policies: selecting models based on cost, latency, capability, data residency, and compliance.

- Caching/deduplication: edge caches that cut redundant requests and token fees.

- Governance: audit trails, redaction, RBAC, and residency controls, in some products integrated with Model Context Protocol (MCP) tooling or comparable standards.

- Latency optimizations: vendor-reported overheads (sub-20 ms for Vercel, 11 µs for Bifrost) suggest that routing adds little delay on critical paths.
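
The components listed above can be combined in a minimal sketch: a unified call interface, policy-based selection, an exact-match cache, and an audit log. It does not reflect any named product's API. `ModelRoute`, `Policy`, and `Router` are hypothetical names, the provider calls are stubs, and real gateways add streaming, retries, cache expiry, and far richer policy.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, List, Optional


@dataclass(frozen=True)
class ModelRoute:
    name: str
    usd_per_million_tokens: float
    p50_latency_ms: float
    regions: FrozenSet[str]
    capabilities: FrozenSet[str]
    call: Callable[[str], str]  # unified interface: prompt in, completion out


@dataclass(frozen=True)
class Policy:
    required_capabilities: FrozenSet[str] = frozenset()
    required_region: Optional[str] = None
    max_latency_ms: Optional[float] = None


class Router:
    def __init__(self, routes: List[ModelRoute]) -> None:
        self.routes = list(routes)
        self.cache: Dict[str, str] = {}
        self.audit_log: List[dict] = []

    def select(self, policy: Policy) -> ModelRoute:
        """Pick the cheapest route that satisfies every policy constraint."""
        eligible = [
            r for r in self.routes
            if policy.required_capabilities <= r.capabilities
            and (policy.required_region is None or policy.required_region in r.regions)
            and (policy.max_latency_ms is None or r.p50_latency_ms <= policy.max_latency_ms)
        ]
        if not eligible:
            raise LookupError("no route satisfies the policy")
        return min(eligible, key=lambda r: r.usd_per_million_tokens)

    def complete(self, prompt: str, policy: Policy) -> str:
        route = self.select(policy)
        # Exact-match cache keyed on route and prompt; identical requests skip the provider.
        key = hashlib.sha256(f"{route.name}\x00{prompt}".encode("utf-8")).hexdigest()
        cache_hit = key in self.cache
        if not cache_hit:
            self.cache[key] = route.call(prompt)
        self.audit_log.append({"route": route.name, "cache_hit": cache_hit})
        return self.cache[key]


# Stub providers that record which backend was actually invoked.
calls: List[str] = []


def stub(name: str) -> Callable[[str], str]:
    def _call(prompt: str) -> str:
        calls.append(name)
        return f"[{name}] {prompt}"
    return _call


router = Router([
    ModelRoute("cheap-model", 0.5, 400.0, frozenset({"us"}), frozenset({"text"}), stub("cheap-model")),
    ModelRoute("premium-model", 10.0, 900.0, frozenset({"us", "eu"}),
               frozenset({"text", "vision"}), stub("premium-model")),
])

text_only = Policy(required_capabilities=frozenset({"text"}))
first = router.complete("Summarize ticket 123", text_only)
second = router.complete("Summarize ticket 123", text_only)  # served from cache
eu_only = router.complete(
    "Summarize ticket 123",
    Policy(required_capabilities=frozenset({"text"}), required_region="eu"),
)
```

In this sketch the cheapest eligible route wins by default, while a residency constraint overrides price. Because every request passes through `complete`, the audit log captures the routing decision and cache outcome in one place, which is the property the governance bullet relies on.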

EXCEPTIONS AND LIMITS

While multi-model routing layers excel for many enterprise workloads, notable edge cases complicate the picture. Frontier or highly specialized models—particularly those under active R&D—often resist abstraction due to hardware dependencies, evolving APIs, or tight integration with proprietary toolchains. Regulated industries with stringent data residency or audit requirements may favor on-prem or closed-loop architectures. In such scenarios, single-vendor or bespoke solutions can retain strategic value, particularly where deep vendor-native features or certified compliance controls outweigh the benefits of interchangeable inference.

CONCLUSION: Power Shifts Toward Aggregators

As inference commoditizes, the real battleground moves above the model layer. Routing and governance platforms, by aggregating supply and arbitrating cost-performance trade-offs, are capturing negotiation leverage, innovation rents, and data advantages. Enterprises that centralize AI traffic through these layers tend to gain sharper pricing, unified policy control, and compounding insight from cross-vendor telemetry. Model vendors respond with bundling, feature lock-in, and gateway partnerships, but the structural momentum favors aggregation, echoing prior shifts in CDN, cloud, and payments markets.