Integration Gravity And The Flexibility Tax In Enterprise AI

The Case

In late 2025, a global financial services firm with 40,000 employees faced the now-familiar AI platform fork in the road. The company had spent a decade standardizing on Microsoft 365, Azure, and Teams. Identity ran through Entra ID, documents lived in SharePoint and OneDrive, and years of security and compliance work were encoded in Microsoft’s stack.

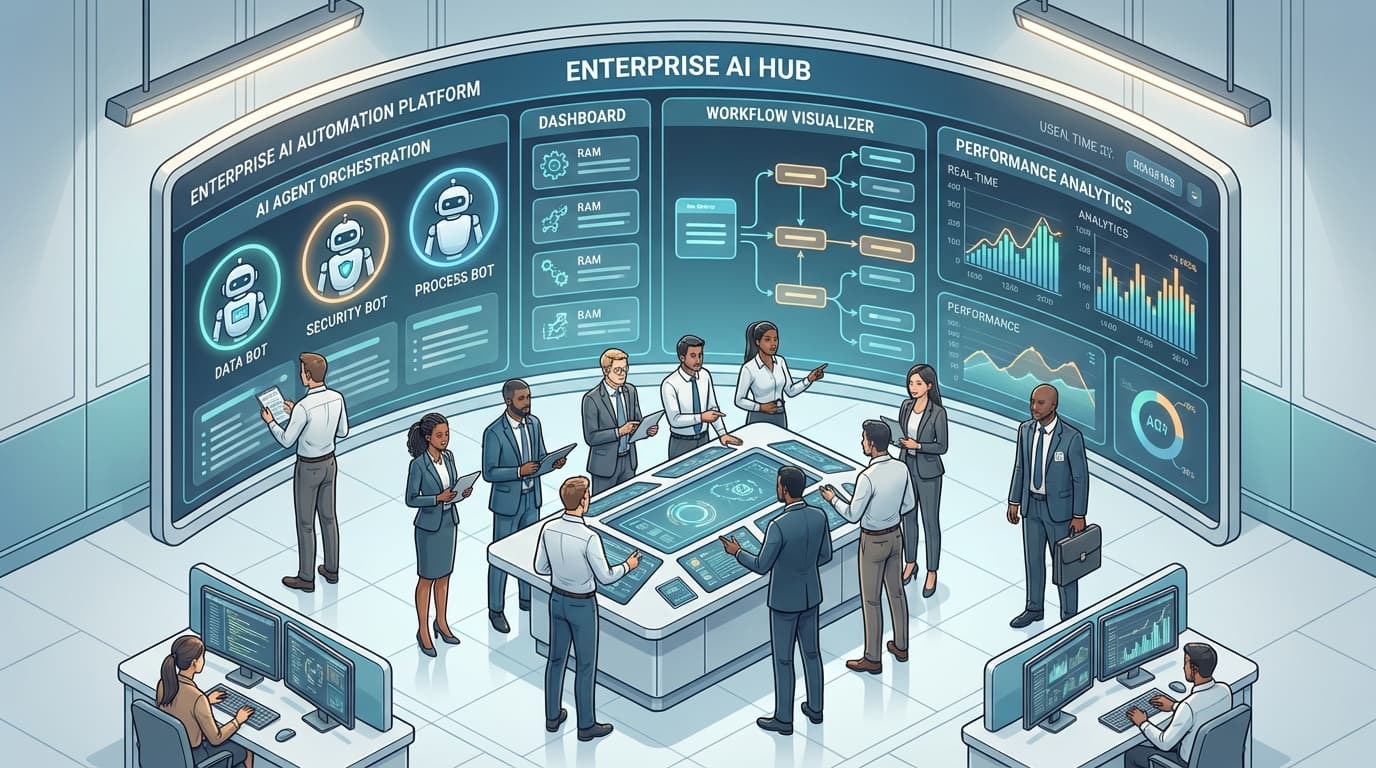

The AI architecture team framed two concrete options. Path A was to double down on a single-provider ecosystem: Microsoft Power Automate (priced at roughly $15 per user per month) for workflows, Copilot Studio for agent creation, and Azure OpenAI as the default model layer. Internal estimates suggested an initial deployment to a few thousand knowledge workers in 2–3 weeks, largely because procurement, security, and data residency questions had been solved years earlier.

Path B was a multi-model approach built on top of the existing cloud estate. The team proposed Kore.ai as the orchestration layer, attracted by its “model- and cloud-agnostic architecture”, 300+ pre-built integrations, and 250+ out-of-the-box agents. Alongside it, Vellum AI, a “unified AI automation platform”, would handle evaluation, versioning, and observability across multiple models. For complex document workflows, the team added Sema4.ai and its SAFE agent framework (Secure, Accurate, Fast, Extensible) to handle unstructured data and automations built by business users.

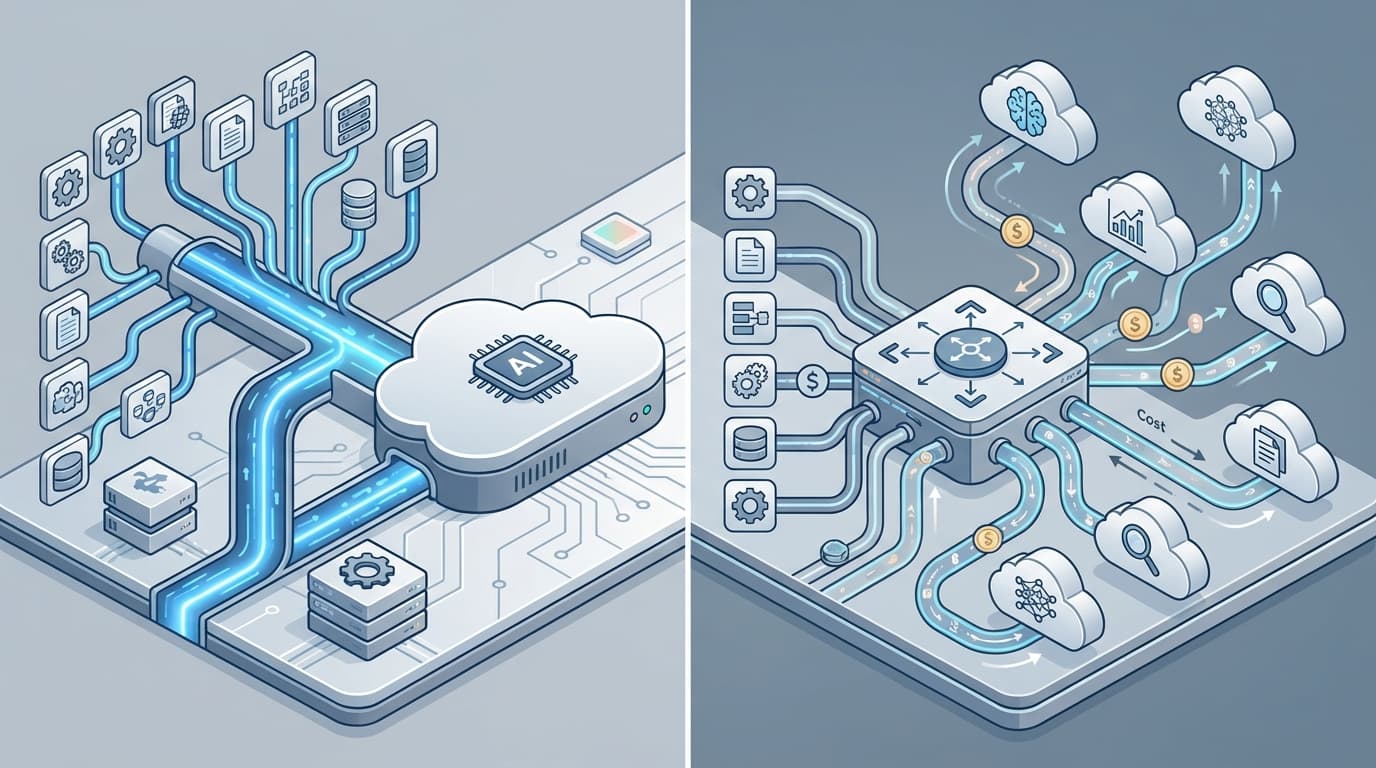

On paper, Path B looked technically superior: freedom to mix GPT-4–class models with cheaper providers for routine tasks, systematic comparison of cost, latency, and accuracy via Vellum’s evaluation tools, and the ability to run Kore.ai agents across multiple clouds and business domains. But security flagged that three net-new vendors would each require due diligence, and procurement highlighted the complexity of reconciling request-based, session-based, and per-seat pricing models. The project manager revised the deployment estimate to 4–8 weeks before the first production workflows could go live, consistent with timelines seen in other multi-model or cloud-native platform rollouts.

In the end, the firm settled on a hybrid. Core productivity scenarios (email triage, document drafting, basic workflow automation) went to the Microsoft stack, where integration was closest to “plug and play”. A smaller, more technical group piloted Kore.ai, Vellum AI, and Sema4.ai for customer support and document-heavy risk workflows. The decision looked tactical, but it revealed a deeper structural pattern: integration gravity pulled the organization toward a single provider, while the multi-model strategy carried a flexibility tax that only a few high-value domains could justify.

The Pattern

Variations of this case are playing out across large enterprises in 2026. Underneath the branding and feature lists, a consistent structural dynamic is emerging: integration gravity favors single-provider AI platforms, while a flexibility tax constrains how far multi-model strategies can spread inside complex organizations.

Integration gravity is the cumulative pull of existing decisions. If identity, data, collaboration, and security are already standardized on one major cloud – typically Microsoft, AWS, or Google Cloud – then that provider’s AI platform starts with a decade’s worth of embedded advantage. Microsoft’s Copilot ecosystem plugs directly into Outlook, Word, Excel, Teams, and Power Automate; AWS Bedrock AgentCore lives next to Lambda, S3, and DynamoDB; Google’s Vertex AI sits inside the same control plane as BigQuery and Google Workspace. Each turns familiarity, pre-approved contracts, and baked-in compliance into a structural moat.

Multi-model platforms push hard in the other direction. Kore.ai, Vellum AI, Sema4.ai, and others promise model- and cloud-agnostic control: pick the right model for each job, route workloads to the cheapest or fastest provider, and avoid being at the mercy of a single vendor’s roadmap or price changes. Their selling points are flexibility, optimization, and vendor independence rather than ecosystem depth.
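
Stripped to its core, “pick the right model for each job” is a selection rule. A minimal sketch, assuming the enterprise already has per-task accuracy scores from its own evaluation set; the model names, prices, and scores in `CATALOG` are hypothetical placeholders, and this is not how any particular vendor implements routing:

```python
from dataclasses import dataclass


@dataclass
class ModelProfile:
    name: str                   # hypothetical model identifier
    cost_per_1k_tokens: float   # hypothetical blended price in USD
    accuracy: dict[str, float]  # accuracy per task type, from an internal eval set


# Hypothetical catalog: none of these names or numbers come from a real vendor.
CATALOG = [
    ModelProfile("premium-model", 0.030, {"triage": 0.97, "risk_review": 0.93}),
    ModelProfile("mid-model", 0.006, {"triage": 0.95, "risk_review": 0.86}),
    ModelProfile("budget-model", 0.001, {"triage": 0.91, "risk_review": 0.72}),
]


def route(task: str, accuracy_floor: float, catalog: list[ModelProfile] = CATALOG) -> str:
    """Return the cheapest model whose measured accuracy on `task` meets the floor."""
    eligible = [m for m in catalog if m.accuracy.get(task, 0.0) >= accuracy_floor]
    if not eligible:
        raise ValueError(f"no model reaches {accuracy_floor:.0%} on task {task!r}")
    return min(eligible, key=lambda m: m.cost_per_1k_tokens).name


print(route("triage", 0.90))       # budget-model
print(route("risk_review", 0.90))  # premium-model
```

The selection rule itself is trivial. What the flexibility tax pays for is keeping the accuracy column honest: someone has to build, label, and refresh the evaluation set behind each number.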

But this flexibility is not free. It arrives bundled with overhead in governance, observability, and integration. Someone inside the enterprise must decide when to use a premium model versus a budget one, how to allocate costs across departments, how to measure model performance beyond anecdote, and how to pass security and compliance review for another new vendor. That overhead is the flexibility tax.

The pattern becomes clearer when looking at deployment timelines and ownership. Embedded single-provider rollouts – such as enabling additional Copilot features or rolling out Power Automate templates – can often reach initial production in about two weeks because they reuse identity, networking, and data-access decisions. By contrast, multi-model or cloud-agnostic platforms like Kore.ai or Vellum AI typically require 4–8 weeks, as enterprises establish new integration patterns, evaluation practices, and cost controls.

This does not mean multi-model strategies fail. Instead, their footprint concentrates where the flexibility tax can be justified. Enterprises use multi-model platforms for customer contact centers, high-volume document processing, or regulated decisioning – domains where a few percentage points of accuracy, lower latency, or better explainability have outsized financial or compliance impact. Everywhere else, integration gravity nudges teams toward whatever AI is bundled with their primary cloud and collaboration suite.
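
The justification can be made explicit with back-of-the-envelope arithmetic. The sketch below is a deliberately simplified model, not a method from the case: it assumes the benefit is a fixed accuracy gain converted into avoided errors, each worth a fixed amount, and that the flexibility tax is a fixed annual cost of platform fees plus governance staff. Every input in the example is hypothetical.

```python
def net_annual_value(
    annual_volume: int,              # interactions or documents per year
    accuracy_gain: float,            # e.g. 0.03 for three percentage points
    value_per_avoided_error: float,  # USD saved per error avoided (hypothetical)
    platform_fees: float,            # annual multi-model platform fees in USD
    governance_cost: float,          # annual staff cost for evaluation, cost allocation, review
) -> float:
    """Benefit of the accuracy gain minus the flexibility tax, in USD per year."""
    avoided_errors = annual_volume * accuracy_gain
    benefit = avoided_errors * value_per_avoided_error
    flexibility_tax = platform_fees + governance_cost
    return benefit - flexibility_tax


# The same hypothetical tax (250k fees + 300k governance) applied to two domains.
contact_center = net_annual_value(2_000_000, 0.03, 12.0, 250_000, 300_000)
internal_helpdesk = net_annual_value(50_000, 0.03, 12.0, 250_000, 300_000)
print(round(contact_center))     # 170000
print(round(internal_helpdesk))  # -532000
```

The point is structural rather than numerical: a fixed tax that pays for itself at contact-center volume is a clear loss for a low-volume internal workflow, which is why multi-model footprints concentrate.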

Even vendors reflect this pattern. IBM watsonx, for example, straddles both worlds, combining IBM’s own models, open models, and tight integration with IBM infrastructure. Kore.ai emphasizes its “model- and cloud-agnostic architecture” yet ships hundreds of deep integrations into the same ecosystems it nominally decouples from. Vellum AI positions itself as a “unified AI automation platform” precisely because the proliferation of models needs a new organizing layer. Single-provider stacks are pulled toward optionality; multi-model platforms are pulled toward opinionated integration. The pattern is convergence around control planes, not around any specific model.

The Mechanics

Under the surface, three reinforcing feedback loops drive integration gravity and the flexibility tax: organizational incentives, operational constraints, and vendor strategies.

Organizational incentives tilt toward fewer platforms. CIOs and CISOs are rewarded for reliability, regulatory compliance, and predictable spend, not for squeezing the last 5% of accuracy out of an LLM benchmark. A single-provider AI stack means one master contract to negotiate, one set of data residency assurances, one identity story, and one pane of glass for audit logging. When Microsoft Power Automate can be added to existing enterprise agreements at around $15 per user per month, or when AWS Bedrock usage sits on the same bill as EC2, the cost of thinking about alternatives rises.

By contrast, multi-model platforms fragment responsibility. Kore.ai’s flexible mix of request-based, session-based, per-seat, and pay-as-you-go pricing looks attractive to a product team optimizing a single use case, but it forces finance and chargeback teams to revisit cost-allocation models. Vellum AI’s strength – fine-grained evaluation of cost, latency, and accuracy – is only realized if someone is mandated to design and maintain those evaluation regimes. Sema4.ai empowers business users to create agents through natural language interfaces and runbooks, which in turn obliges governance to prevent an explosion of semi-managed automations.
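
The chargeback side of that fragmentation can be sketched as a normalization step: usage arrives on different pricing bases, and finance needs one figure per department. The rates below are hypothetical and do not reflect Kore.ai’s or any other vendor’s actual price card; the department names are illustrative.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical unit rates in USD; NOT any vendor's real pricing.
RATES = {
    "request": 0.02,         # per API request
    "session": 0.50,         # per conversational session
    "seat": 30.00,           # per named user per month
    "million_tokens": 4.00,  # pay-as-you-go, per million tokens
}


@dataclass
class UsageRecord:
    department: str
    pricing_basis: str  # must be one of the keys in RATES
    quantity: float     # requests, sessions, seats, or millions of tokens this month


def monthly_chargeback(records: list[UsageRecord]) -> dict[str, float]:
    """Convert mixed-basis usage into one USD total per department."""
    totals: dict[str, float] = defaultdict(float)
    for record in records:
        if record.pricing_basis not in RATES:
            raise ValueError(f"unknown pricing basis: {record.pricing_basis!r}")
        totals[record.department] += RATES[record.pricing_basis] * record.quantity
    return dict(totals)


usage = [
    UsageRecord("customer_support", "session", 10_000),
    UsageRecord("customer_support", "request", 50_000),
    UsageRecord("risk", "seat", 20),
    UsageRecord("risk", "million_tokens", 100),
]
print(monthly_chargeback(usage))  # {'customer_support': 6000.0, 'risk': 1000.0}
```

The arithmetic is the easy part. The recurring cost is agreeing which department owns each usage record, work that a single per-seat enterprise agreement largely lets finance skip.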

Operational constraints amplify these incentives. Most enterprises simply lack mature MLOps and model evaluation practices. They have hardened processes for promoting application code, but not for promoting prompts, agents, or model choices. Single-provider ecosystems approximate safety by bundling opinionated defaults: identity boundaries, data-loss prevention policies, RBAC, and logging are already wired in. Power Automate and Copilot Studio, for example, integrate natively with Entra ID, DLP policies, and existing RBAC configurations; AWS Bedrock AgentCore inherits IAM, encryption, and CloudTrail logging.
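
A promotion process for prompts or model choices could borrow the shape of a CI check for code. In the minimal sketch below, a candidate configuration is promoted only if, on the same labeled evaluation set, it does not lose more accuracy than a tolerance and stays within latency and cost budgets. The thresholds, the nearest-rank p95 definition, and the class and function names are assumptions for illustration, not the workflow of Vellum AI or any other product.

```python
from dataclasses import dataclass


@dataclass
class EvalRun:
    correct: list[bool]        # per-example correctness against ground-truth labels
    latencies_ms: list[float]  # per-example latency in milliseconds
    costs_usd: list[float]     # per-example cost in USD

    def accuracy(self) -> float:
        return sum(self.correct) / len(self.correct)

    def p95_latency_ms(self) -> float:
        # Nearest-rank percentile: the ceil(0.95 * n)-th smallest latency.
        ordered = sorted(self.latencies_ms)
        rank = -(-95 * len(ordered) // 100)  # integer ceiling of 0.95 * n
        return ordered[rank - 1]

    def mean_cost_usd(self) -> float:
        return sum(self.costs_usd) / len(self.costs_usd)


def promotion_gate(
    candidate: EvalRun,
    baseline: EvalRun,
    max_accuracy_drop: float = 0.01,  # hypothetical tolerance
    p95_budget_ms: float = 2000.0,    # hypothetical latency budget
    cost_budget_usd: float = 0.01,    # hypothetical mean cost per call
) -> tuple[bool, list[str]]:
    """Return (promote?, reasons for rejection) for a prompt or model change."""
    reasons = []
    if candidate.accuracy() < baseline.accuracy() - max_accuracy_drop:
        reasons.append("accuracy regressed beyond tolerance")
    if candidate.p95_latency_ms() > p95_budget_ms:
        reasons.append("p95 latency over budget")
    if candidate.mean_cost_usd() > cost_budget_usd:
        reasons.append("mean cost over budget")
    return (not reasons, reasons)


baseline = EvalRun([True] * 9 + [False], [800.0] * 10, [0.004] * 10)
candidate = EvalRun([True] * 9 + [False], [600.0] * 10, [0.001] * 10)
print(promotion_gate(candidate, baseline))  # (True, [])
```

The gate itself is deliberately boring. The hard part is organizational: agreeing on the evaluation set, the tolerances, and who signs off when a change fails.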

Multi-model platforms flip the default. Kore.ai’s multi-agent orchestration and agentic RAG capabilities assume the enterprise will define which systems each agent can touch, what tools it may invoke, and what retention rules apply to retrieved data. Vellum’s observability requires teams to instrument prompts and workflows, collect ground-truth labels where possible, and decide which metrics matter. Sema4.ai’s SAFE agent framework (Secure, Accurate, Fast, Extensible) makes these dimensions explicit but does not remove the need for organizational decisions about what “Secure” or “Accurate” means in each business process.
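
Those enterprise decisions can be written down as explicit, default-deny policy. The sketch assumes a simple in-process allowlist keyed by agent name; the agent names, tool names, and retention periods are hypothetical, and it does not reproduce the configuration format of Kore.ai, Sema4.ai, or any other platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    allowed_tools: frozenset[str]  # tools this agent may invoke
    retention_days: int            # how long retrieved data may be kept; 0 = never


# Hypothetical policies; agent and tool names are illustrative only.
POLICIES = {
    "support-triage-agent": AgentPolicy(frozenset({"crm.read_ticket", "kb.search"}), 30),
    "risk-document-agent": AgentPolicy(frozenset({"dms.read_document"}), 0),
}


def may_invoke(agent: str, tool: str) -> bool:
    """Default deny: unknown agents and unlisted tools are refused."""
    policy = POLICIES.get(agent)
    return policy is not None and tool in policy.allowed_tools


def may_retain(agent: str, age_days: int) -> bool:
    """Retrieved data may be kept only while younger than the agent's retention limit."""
    policy = POLICIES.get(agent)
    return policy is not None and age_days < policy.retention_days


print(may_invoke("support-triage-agent", "kb.search"))          # True
print(may_invoke("support-triage-agent", "dms.read_document"))  # False
print(may_invoke("unregistered-agent", "kb.search"))            # False
```

In a single-provider stack, equivalent controls often come from the identity and DLP layer already in place; in a multi-model overlay, someone has to author and review a table like this for every agent.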

Vendor strategies close the loop. The hyperscalers are deliberately turning their AI offerings into the default choices inside their ecosystems. Microsoft binds Copilot deeply into Office documents and Teams chats; AWS Bedrock AgentCore is architected to feel like just another AWS-native service as it orchestrates agents across Lambda, Step Functions, and DynamoDB; Google Cloud’s Vertex AI offers low-code pipelines that align with existing data engineering practices on BigQuery and Dataflow. Each step makes deviating from the default more expensive relative to the perceived risk of staying with it.

Meanwhile, multi-model vendors respond by becoming more platform-like themselves. Kore.ai does not just offer connectors; with 300+ pre-built integrations and 250+ pre-built agents spanning CRM, ERP, and communication tools, it is becoming an overlay orchestration fabric across the enterprise. Vellum AI leans into evaluation, version control, and rollout management, effectively acting as an MLOps layer for prompts and agents. Sema4.ai, with its Document Intelligence and Control Room capabilities, positions itself as an end-to-end environment for business-user–authored agents, not merely a “model switcher”.

Together, the three loops play out in a recognizable sequence:

- Existing cloud and collaboration standards push the first wave of AI adoption into single-provider stacks.

- As usage grows, gaps appear in specialized domains (contact centers, document-intensive workflows, regulated decisioning) where default models underperform or costs spike.

- Multi-model platforms are adopted surgically to address these high-leverage gaps, justified despite the flexibility tax because the business value is concentrated.

- Over time, these multi-model overlays gain their own gravity – with their own integrations, governance processes, and user communities – and begin to resemble secondary control planes alongside the primary cloud stack.

Crucially, what determines success is less the choice between single-provider and multi-model, and more the enterprise’s readiness for governance, observability, and evaluation. Organizations with disciplined experimentation pipelines, clear chargeback models, and strong data engineering practices can extract real advantage from platforms like Kore.ai, Vellum AI, and Sema4.ai. Those without tend to default to whatever their main cloud provider offers and accept the resulting lock-in as the cost of moving fast without breaking governance.

The Implications

Once the integration-gravity and flexibility-tax pattern is visible, several outcomes become predictable.

First, AI platform decisions in 2026 and beyond will mostly be path-dependent. Enterprises that already standardized on Microsoft will increasingly resemble “Copilot-first” shops, with Power Automate and Copilot Studio as the default automation fabric. AWS-centric organizations will see Bedrock AgentCore quietly become the default orchestration layer. Google Cloud’s customers will deepen Vertex AI usage. In all three cases, multi-model tools will arrive later and in narrower bands, focused on domains where the hyperscaler defaults are too expensive, too inflexible, or too weak on domain accuracy.

Second, multi-model platforms will be judged less on their raw model menus and more on their governance and evaluation capabilities. The winning value propositions will look like Vellum AI’s emphasis on rigorous evaluation and observability, Kore.ai’s enterprise-wide orchestration of customer experience (CX), employee experience (EX), and process agents, or Sema4.ai’s SAFE agent framework and Control Room for business users. In other words, they will compete as governance and control planes, not just as routers between models.

Third, headlines about AI cost overruns and “model sprawl” will disproportionately come from organizations that leapt into multi-model architectures without strengthening observability and cost-allocation practices. Conversely, stories about sudden renegotiations or dependency risks will cluster around enterprises that went all-in on a single provider without preserving optionality in their interfaces and data architectures.

Finally, regulators and auditors will implicitly reinforce this pattern. It is easier to assess risk on a smaller number of deeply integrated platforms than across an ever-changing catalog of models. That dynamic will strengthen the hand of the hyperscalers and of those multi-model platforms that make evaluation, logging, and explainability first-class features.

For enterprise leaders, the structural lesson is not that one camp will “win”. Instead, it is that AI platforms will crystallize around control planes aligned with existing infrastructure choices, and that any deviation carries an explicit flexibility tax that must be justified by concentrated business value. Understanding this dynamic turns AI strategy from a vendor beauty contest into a clearer exercise in mapping where integration gravity should be obeyed, and where it is worth paying to escape it.