Executive summary – what changed and why it matters

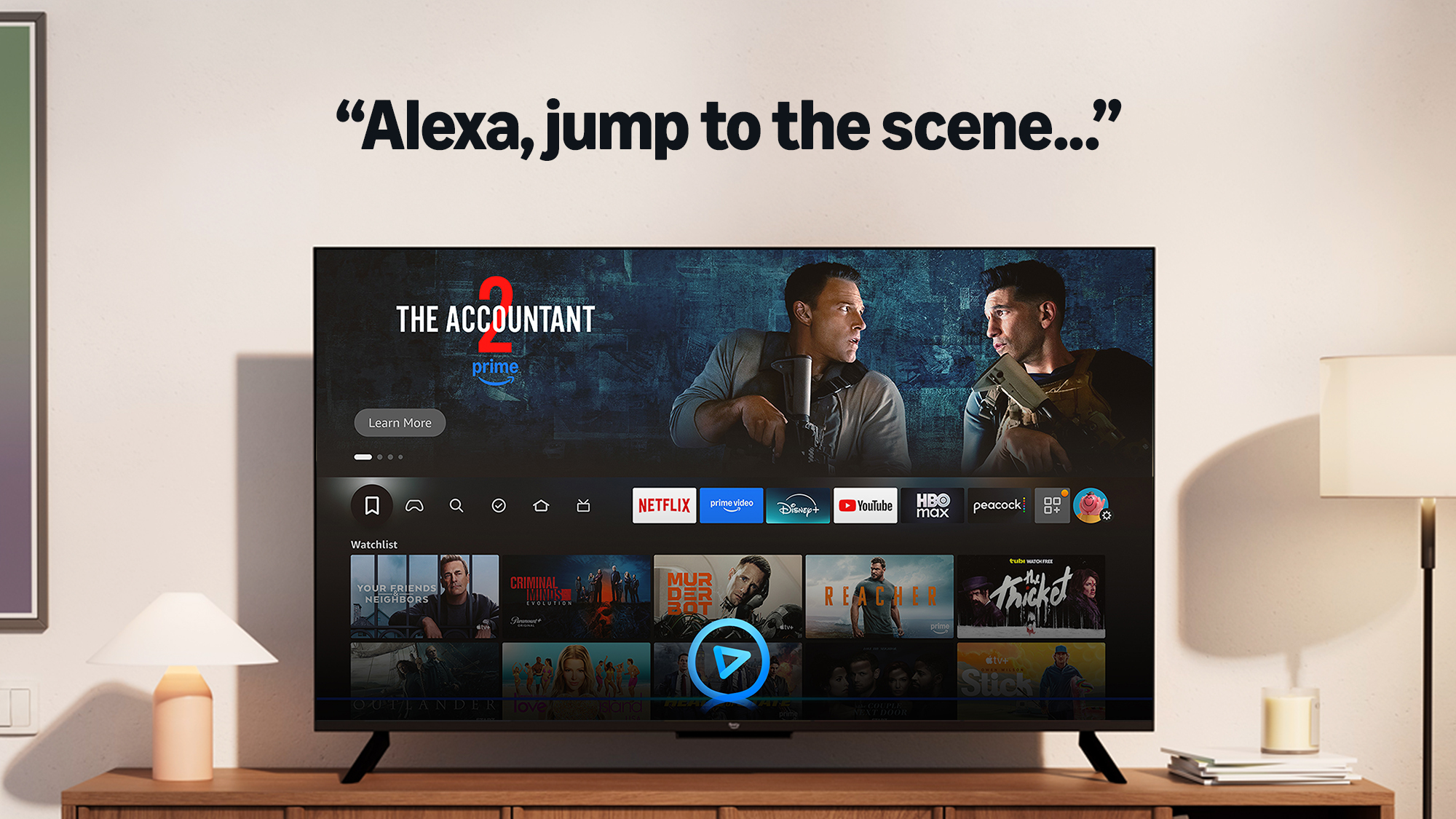

Amazon launched an AI-powered Alexa+ “scene jump” feature on Fire TV that lets viewers jump to specific moments in Prime Video movies by describing them in natural language. Technically, it combines visual understanding, caption analysis and large language models (Amazon Nova and Anthropic Claude via Amazon Bedrock) against a scene index of tens of thousands of timestamps; Amazon reports a median end‑to‑end latency of under 2 seconds. For product leaders, this shifts content discovery from metadata search to conversational, multimodal retrieval, but it also introduces measurable cost, licensing, latency and governance tradeoffs.

Key takeaways

- Substantive change: natural‑language scene retrieval across thousands of Prime Video movies, driven by LLMs and visual/caption indexing.

- Quantified impact: “tens of thousands” of scenes indexed; typical scene‑jump latency under 2 seconds; Bedrock inference billed at roughly $0.0001/token (an example figure); S3 storage, CloudFront transfer and Transcribe speech‑to‑text add further costs.

- Limitations: currently limited to movies that are included with a Prime membership or have been purchased or rented; TV show support is planned for later.

- Risk vector: privacy (voice transcripts and query logs), hallucination or incorrect scene matches, and licensing/content‑availability edge cases.

Breaking down the capability

At runtime the pipeline is: user voice → speech‑to‑text → LLM intent/metadata extraction → scene index lookup → playback at timestamp. Amazon uses Bedrock to host multiple models (Nova for conversational understanding, Anthropic Claude for reasoning/verification). The scene index is built from captions, X‑Ray metadata and visual analysis; Amazon says it indexed tens of thousands of scenes across thousands of movies. In practice, that means queries that reference quotes, props, characters or short plot beats can be resolved without the user naming the title.
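
The stage order above can be sketched in a few lines of Python. This is a toy illustration, not Amazon's implementation: the names `Scene`, `SCENE_INDEX`, `speech_to_text`, `extract_intent`, `lookup_scene` and `scene_jump` are all hypothetical, the speech‑to‑text and LLM stages are replaced by trivial stand-ins, and the index lookup is plain keyword overlap rather than multimodal retrieval.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass(frozen=True)
class Scene:
    title: str
    timestamp_s: int
    description: str  # text derived from captions, metadata or visual analysis


# Toy index (hypothetical data); a real one would hold tens of thousands of entries.
SCENE_INDEX = [
    Scene("Movie A", 754, "hero finds the hidden map in the attic"),
    Scene("Movie A", 4310, "final duel on the bridge at night"),
    Scene("Movie B", 1290, "chef drops the wedding cake"),
]

STOPWORDS = {"the", "a", "an", "to", "in", "on", "at", "of",
             "where", "when", "scene", "jump"}


def speech_to_text(audio: bytes) -> str:
    """Stand-in for an STT service: the 'audio' here is already UTF-8 text."""
    return audio.decode("utf-8")


def extract_intent(transcript: str) -> Set[str]:
    """Stand-in for LLM intent extraction: keep only the content words."""
    words = (w.strip(".,!?").lower() for w in transcript.split())
    return {w for w in words if w and w not in STOPWORDS}


def lookup_scene(terms: Set[str]) -> Optional[Scene]:
    """Return the scene whose description shares the most terms, if any."""
    def overlap(scene: Scene) -> int:
        return len(terms & set(scene.description.split()))

    best = max(SCENE_INDEX, key=overlap)
    return best if overlap(best) > 0 else None


def scene_jump(audio: bytes) -> str:
    """Voice -> text -> intent -> index lookup -> playback command."""
    scene = lookup_scene(extract_intent(speech_to_text(audio)))
    if scene is None:
        return "no match"
    return f"play {scene.title} at {scene.timestamp_s}s"
```

Calling `scene_jump(b"Jump to the scene where the chef drops the cake")` resolves to the Movie B entry without the user naming the title, which is the behaviour the paragraph describes; a query with no overlapping terms returns `"no match"`.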

Operational numbers provided or implied: sub‑2s median latency (covering speech‑to‑text, LLM inference, index lookup and video seek), capacity for millions of queries per day via auto‑scaling, and incremental costs across Bedrock inference (~$0.0001/token as a reference figure), S3 storage (~$0.023/GB/month), CloudFront egress (~$0.085/GB) and Transcribe (~$0.024/min).
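
To see how these reference prices combine, the following back-of-the-envelope sketch turns them into per‑query and monthly estimates. The unit prices are the article's reference figures; the workload numbers used in the example (tokens per query, seconds of audio, index size, egress volume) and the function names `per_query_cost` and `monthly_cost` are assumptions chosen purely for illustration.

```python
# Reference unit prices quoted in the text (illustrative, not a price list).
BEDROCK_USD_PER_TOKEN = 0.0001
TRANSCRIBE_USD_PER_MIN = 0.024
S3_USD_PER_GB_MONTH = 0.023
CLOUDFRONT_USD_PER_GB = 0.085


def per_query_cost(tokens: int, audio_seconds: float) -> float:
    """Variable cost of one query: LLM tokens plus speech-to-text time."""
    inference = tokens * BEDROCK_USD_PER_TOKEN
    transcription = (audio_seconds / 60) * TRANSCRIBE_USD_PER_MIN
    return inference + transcription


def monthly_cost(queries_per_day: int, tokens: int, audio_seconds: float,
                 index_gb: float, egress_gb: float, days: int = 30) -> float:
    """Monthly total: per-query costs plus index storage and egress."""
    queries = queries_per_day * days
    variable = queries * per_query_cost(tokens, audio_seconds)
    storage = index_gb * S3_USD_PER_GB_MONTH
    egress = egress_gb * CLOUDFRONT_USD_PER_GB
    return variable + storage + egress
```

Under the assumed workload of 500 tokens and 5 seconds of audio per query, `per_query_cost(500, 5)` comes to roughly $0.052, almost all of it inference. At that rate the per‑query term dominates storage and egress once volume reaches millions of queries per day, which is why token budgets and caching deserve attention first.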

Why now

Multiple factors converge: LLMs are mature enough for conversational intent extraction, Bedrock offers multi‑model orchestration and low‑latency endpoints, and streaming services have mature caption/X‑Ray metadata to index. Consumer expectations for voice and instant gratification are rising, so this feature reduces friction for content retrieval and potentially increases engagement and incremental purchases or rentals.

Operational implications and costs

Adopting a similar feature requires three practical investments: build or license a high‑quality scene index (time‑coded captions, character and quote extraction), provision low‑latency inference (edge or regional Bedrock endpoints), and integrate with the content platform to seek to timestamps and enforce purchase/DRM checks. Expect nontrivial recurring inference and egress costs, and plan to cache hot scenes to keep latency and per‑query cost manageable.
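
One simple way to cache hot scenes is a least‑recently‑used (LRU) cache in front of the expensive resolution step. The sketch below is a minimal in‑process version under stated assumptions: `SceneCache` and its `resolve` callback are hypothetical names, queries are matched only after trivial whitespace and case normalisation, and a production system would more likely use a shared cache service.

```python
from collections import OrderedDict
from typing import Callable, Tuple

Resolved = Tuple[str, int]  # (title, timestamp in seconds)


class SceneCache:
    """LRU cache keyed by normalised query text."""

    def __init__(self, capacity: int, resolve: Callable[[str], Resolved]):
        self.capacity = capacity
        self.resolve = resolve          # expensive path: LLM + index lookup
        self._entries: "OrderedDict[str, Resolved]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(query: str) -> str:
        # Collapse whitespace and case so trivially different phrasings share a slot.
        return " ".join(query.lower().split())

    def get(self, query: str) -> Resolved:
        key = self._key(query)
        if key in self._entries:
            self._entries.move_to_end(key)      # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        result = self.resolve(query)
        self._entries[key] = result
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)   # evict least recently used
        return result
```

The `hits` and `misses` counters give a direct read on how much inference spend the cache is avoiding, which helps when sizing `capacity` against a target such as the most frequently requested scenes.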

Risks, governance and compliance

Key governance points: voice and query logs are sensitive, so privacy teams must define retention, anonymization and opt‑out flows. LLMs can hallucinate and return a plausible‑sounding but wrong scene, so low‑confidence matches should go through a verification UI (confirm before jumping) gated by a confidence score threshold. Licensing matters too: the feature must check entitlement (Prime, purchased/rented) at retrieval time. Finally, model provenance and region residency matter for regulated markets; Bedrock regional availability should be checked.
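
The entitlement check and the confidence gate can be combined into a single decision at retrieval time. The following is a minimal sketch, assuming a hypothetical `decide` function, an `Action` enum, string access states and a default threshold of 0.8; none of these come from Amazon, and the right threshold would have to be tuned against measured hit rates.

```python
from enum import Enum


class Action(Enum):
    JUMP = "jump"        # seek straight to the timestamp
    CONFIRM = "confirm"  # ask the viewer to confirm before seeking
    BLOCK = "block"      # viewer is not entitled to the title


# Access states that grant playback (assumed labels for illustration).
ENTITLING_STATES = {"prime", "purchased", "rented"}


def decide(confidence: float, access_state: str, threshold: float = 0.8) -> Action:
    """Check entitlement first, then gate on match confidence."""
    if access_state not in ENTITLING_STATES:
        return Action.BLOCK
    if confidence < threshold:
        return Action.CONFIRM
    return Action.JUMP
```

Checking entitlement before confidence means a viewer is never asked to confirm a jump into a title they cannot play.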

Competitive context

Compared with rivals, Amazon’s advantage is tight integration between Alexa, Fire TV, Prime Video metadata and Bedrock multi‑model hosting. Google Assistant and Apple Siri support voice search but don’t yet match the depth of multimodal scene retrieval; Roku and others remain metadata‑limited. For enterprises building similar features, the choice is either to integrate with cloud LLM services and proprietary metadata (fast but vendor‑dependent) or to build an open pipeline with self‑hosted models and custom indexing (more control, higher ops burden).

Recommendations – what teams should do now

- Product: pilot with a constrained catalog (popular movies) to measure hit rate, user intent ambiguity and business metrics (engagement, rentals). Require a confirmation step when model confidence falls below a set threshold.

- Engineering/SRE: instrument per‑stage latency and cost; cache the top 10% of scenes; evaluate edge inference (AWS Wavelength or regional Bedrock endpoints) to keep median latency under 2 seconds.

- Legal/Content Licensing: verify entitlement checks for on‑demand vs subscription content and get clear terms for query logging and model training data use.

- Privacy/Safety: define retention, opt‑outs, and a plan to surface confidence and correction flows when the LLM misidentifies scenes.
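
For the per‑stage latency instrumentation recommended to Engineering/SRE above, a minimal sketch might look like the following. `StageTimer` is a hypothetical helper, the stage names are up to the caller, and the injectable `clock` parameter exists only so the timer can be tested deterministically; a real deployment would export these samples to its metrics system rather than hold them in memory.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from statistics import median
from typing import Callable, Dict, List


class StageTimer:
    """Collects wall-clock samples per pipeline stage and reports medians."""

    def __init__(self, clock: Callable[[], float] = time.perf_counter):
        self._clock = clock
        self._samples: Dict[str, List[float]] = defaultdict(list)

    @contextmanager
    def stage(self, name: str):
        start = self._clock()
        try:
            yield
        finally:
            # Record the sample even if the stage raised.
            self._samples[name].append(self._clock() - start)

    def median_by_stage(self) -> Dict[str, float]:
        return {name: median(vals) for name, vals in self._samples.items()}

    def slowest_stage(self) -> str:
        """Name of the stage with the highest median latency."""
        medians = self.median_by_stage()
        return max(medians, key=medians.get)
```

Wrapping each step as `with timer.stage("stt"): ...`, `with timer.stage("llm"): ...` and so on, plus one outer `with timer.stage("total"): ...`, makes it possible to compare the median of `"total"` against the 2‑second target and to see which stage to optimise first.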

Bottom line: Amazon’s scene jump is a concrete step toward conversational, multimodal content retrieval that can materially improve discoverability and engagement. But deployers must measure latency/costs, lock down licensing and privacy controls, and design UIs that surface confidence and allow user correction before relying on this as a primary discovery mechanism.