What Changed and Why It Matters

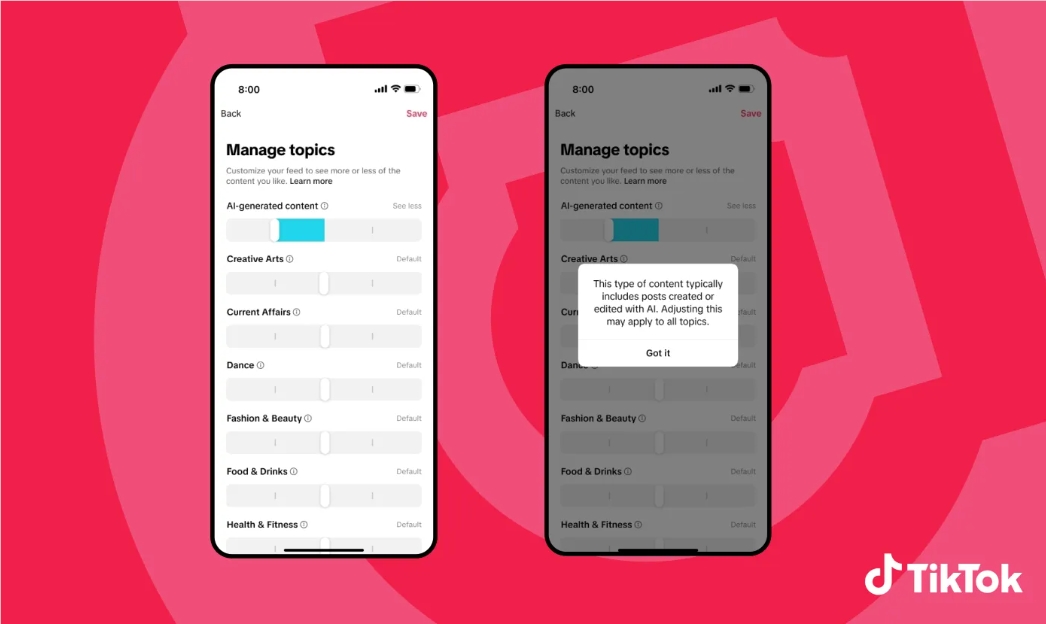

TikTok is rolling out an AI-generated content (AIGC) slider inside Manage Topics so users can dial up or down how much AI content appears in their For You feed. It’s also testing “invisible watermarks” that work alongside C2PA Content Credentials to label AI-made media more reliably, and launching a $2 million AI literacy fund. For operators, this introduces a new user signal that could reshape distribution for AI-heavy creators, plus a fresh layer of provenance tech that affects compliance, brand safety, and moderation workflows.

Key Takeaways

- The AIGC slider adds a user-controlled ranking signal; it will likely influence reach for AI-forward content without removing it entirely.

- Invisible watermarks create a TikTok-readable provenance layer that’s harder to strip than standard metadata, but they come with interoperability trade-offs.

- Labeling coverage improves for content created with TikTok tools or uploaded with C2PA credentials; unlabeled third-party AI content remains a gap.

- $2M for AI literacy is positive but modest given TikTok’s scale; expect education campaigns, not systemic risk mitigation by itself.

- Enterprises should update disclosure policies, adopt C2PA in creative pipelines, and test how the slider impacts performance by audience segment.

Breaking Down the Announcement

The AIGC control lives under Settings → Content Preferences → Manage Topics, alongside categories like Dance, Sports, and Food & Drinks. It lets users adjust, not eliminate, AI content exposure: think ranking weights, not a hard filter. TikTok says the change will roll out over the coming weeks, so expect staggered availability and iterative tuning.
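To make the "ranking weights, not a hard filter" distinction concrete, here is a minimal sketch. TikTok has not published how the slider feeds into ranking, so everything here is an assumption made for illustration: the `Candidate` fields, the slider range of -1 to 1, and the `strength` parameter are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    video_id: str
    base_score: float  # relevance score from a hypothetical upstream ranker
    is_aigc: bool      # True if the video carries an AI-generated label


def aigc_multiplier(slider: float, strength: float = 0.5) -> float:
    """Map a slider in [-1, 1] to a score multiplier (assumed linear mapping).

    -1 means "less AI", 0 is neutral, +1 means "more AI". The multiplier
    never reaches zero, so AI content is down-weighted rather than removed.
    """
    slider = max(-1.0, min(1.0, slider))  # clamp out-of-range input
    return 1.0 + strength * slider


def rerank(candidates, slider):
    """Re-order candidates by adjusted score; no candidate is dropped."""
    m = aigc_multiplier(slider)
    scored = [(c.base_score * (m if c.is_aigc else 1.0), c) for c in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored]


feed = [
    Candidate("a", 0.9, True),
    Candidate("b", 0.8, False),
    Candidate("c", 0.5, True),
]
# With the slider at -1.0, "b" moves ahead of "a", but "a" and "c" stay in the feed.
print([c.video_id for c in rerank(feed, -1.0)])
```

A hard filter would instead return only the candidates where `is_aigc` is false. The soft weight keeps strong AI content eligible, which matches the announcement's description of the control as an adjustment rather than an off switch.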

On provenance, TikTok already reads and writes C2PA Content Credentials, which embed metadata signaling AI generation. The company will add invisible watermarks to AI assets produced with TikTok tools (e.g., AI Editor Pro) and to uploads that arrive with C2PA credentials, creating a second, platform-detectable tag intended to persist through common edits. Because only TikTok can read this watermark, it improves internal detection and labeling but doesn’t inherently help other platforms verify the same content.

Finally, the $2M literacy fund will back experts and nonprofits (e.g., Girls Who Code) to create materials that teach AI literacy and safety. Useful for awareness and creator education, but not a substitute for robust detection, enforcement, and user controls.

Why This Matters Now

AI-only feeds are proliferating: Meta launched Vibes for short AI videos and OpenAI introduced Sora as a social platform for creating and sharing AI-generated videos. TikTok’s feed is already saturated with realistic AI clips, from historical explainers to celebrity imagery, which raises brand safety, misinformation, and user fatigue risks. Regulators are also escalating expectations: large platforms face pressure to label synthetic media and give users meaningful controls under regimes like the EU’s Digital Services Act and deepfake disclosure requirements emerging globally. The slider and watermarking are TikTok’s bid to show it’s advancing user choice and provenance in line with those pressures.

What Changes for Operators

Expect the slider to act as a new distribution input. If a material share of your audience dials AI content down, AI-heavy posts may see lower watch time and reach, particularly in cold-start recommendations. Conversely, audiences opting for more AI could increase lift for certain formats (e.g., stylized explainers, synthetic product showcases). Teams should plan controlled experiments comparing AI vs. non‑AI treatments across cohorts and track changes to completion rate, shares, and follow intent.
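One simple way to read such an experiment is a two-proportion z-test on completion rate. The sketch below uses only the Python standard library. The function name and the counts in the usage lines are invented for illustration and are not TikTok data. It also assumes viewers were randomly assigned to each treatment, which organic posting does not guarantee.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    success_*: views that completed the video in each arm
    n_*:       total views in each arm
    Returns (z, p_value).
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled proportion under the null hypothesis of equal rates.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both arms are all-success or all-failure
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: non-AI treatment (arm A) vs. AI treatment (arm B).
z, p = two_proportion_z_test(success_a=500, n_a=1000, success_b=400, n_b=1000)
print(f"z={z:.2f}, p={p:.4g}")
```

Run the same comparison per cohort (for example, by region or follower status) rather than only in aggregate, since slider adoption may differ by audience segment.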

For trust and safety teams, invisible watermarks plus C2PA improve detection coverage for assets created or ingested with credentials. But the system still won’t catch AI content produced off-platform without credentials or robust watermarks. Human review, behavioral signals, and user reports remain essential, especially for high-risk topics (politics, health, finance).

For compliance leads, the combination of user controls, labeling, and education helps demonstrate risk mitigation. However, auditability hinges on measurable accuracy: false negatives (missed AI) and false positives (mislabeling human-made content) can both create legal and reputational exposure. Measure both rates against a human-reviewed sample so you can produce the numbers when asked.
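As a rough sketch of what those metrics could look like, the function below computes false negative rate, false positive rate, and precision from a human-reviewed sample. The record format (pairs of ground truth and platform label) and the function name are assumptions for illustration. TikTok does not expose an audit API in this form.

```python
def labeling_error_rates(records):
    """Compute labeling error rates from (is_ai_truth, labeled_ai) boolean pairs.

    is_ai_truth: reviewer's judgment that the asset is AI-generated
    labeled_ai:  whether the platform applied an AI label
    Rates are None when their denominator is zero.
    """
    tp = fp = tn = fn = 0
    for is_ai_truth, labeled_ai in records:
        if is_ai_truth and labeled_ai:
            tp += 1
        elif is_ai_truth and not labeled_ai:
            fn += 1  # missed AI content
        elif not is_ai_truth and labeled_ai:
            fp += 1  # human-made content mislabeled as AI
        else:
            tn += 1

    def ratio(num, den):
        return num / den if den else None

    return {
        "false_negative_rate": ratio(fn, tp + fn),
        "false_positive_rate": ratio(fp, fp + tn),
        "precision": ratio(tp, tp + fp),
        "sample_size": tp + fp + tn + fn,
    }


# Hypothetical review sample: 10 AI assets (2 missed), 10 human-made assets (1 mislabeled).
sample = [(True, True)] * 8 + [(True, False)] * 2 + [(False, True)] * 1 + [(False, False)] * 9
print(labeling_error_rates(sample))
```

Reporting the two error rates separately matters because they carry different risks: a missed deepfake is a safety problem, while a mislabeled human-made video is a creator-relations and appeals problem.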

Risks and Caveats

- Watermark robustness: “Invisible” marks often degrade under recompression, resizing, or re-encoding. Claims of resilience need validation across common creator workflows.

- Interoperability: A TikTok-only watermark boosts internal detection but doesn’t help other platforms, potentially fragmenting provenance signals across the ecosystem.

- Coverage gaps: Content generated with third-party tools and stripped of metadata may still evade labeling. Bad actors can deliberately obfuscate.

- Creator impact: If audiences reduce AI exposure, algorithmic shifts could penalize legitimate, disclosed AI-assisted creative. Expect appeals and policy edge cases.

- Education vs. enforcement: The literacy fund raises awareness but doesn’t replace policy clarity, escalation paths, and penalties for deceptive synthetic media.

Competitive Angle

Compared with peers, TikTok’s slider is more granular than simple “show label” policies seen elsewhere, giving users an active dial rather than a passive tag. Meta has leaned into AI-only experiences, while TikTok is segmenting without forking the feed. On provenance, many platforms support C2PA; TikTok’s proprietary invisible watermark is a differentiator for internal reliability but less portable than open credentials. Expect pressure on rivals to match user controls and on TikTok to publish watermark performance data.

Recommendations

- Adopt C2PA by default: Embed Content Credentials in all AI-assisted assets. Where feasible, preserve credentials through editing and export to maximize downstream detection.

- Run A/B tests by audience: Compare AI vs. non‑AI treatments and monitor how the AIGC slider affects completion, shares, and conversion. Rebalance your content mix accordingly.

- Tighten disclosure and appeals: Update creator contracts and internal guidelines for AI use and labeling. Define an appeals path for mislabeling and track error rates.

- Harden brand safety: For sensitive campaigns, prefer human-shot or lightly assisted content until watermark and labeling accuracy are validated for your categories.

- Prepare comms for deepfakes: Establish a rapid response plan with evidence chains (original files, credentials) to rebut manipulated or impersonation content.

Bottom line: TikTok’s slider and watermarking raise the bar on user choice and provenance, but they won’t solve synthetic media risk alone. Treat them as new signals in your distribution and safety stack—and instrument your workflows to measure their real impact.