GPT-5 vs Claude 4.5 vs Gemini: Which AI Model Wins in 2025?

An in-depth, business-first assessment for CIOs, CTOs, and CFOs evaluating enterprise LLM platforms in 2025.

Executive Summary

There is no single winner across all use cases. Based on enterprise pilots and production rollouts we’ve reviewed, each model leads in different dimensions that matter to the business:

- GPT‑5: Best for Microsoft-first organizations seeking rapid developer productivity and broad tool integration. Typical ROI: 6-12 months when focused on code and workflow automation.

- Claude 4.5 (Sonnet): Best for agentic workflows, long-context work, and cost-efficient complex coding. Typical ROI: 4-8 months in engineering and operations use cases.

- Gemini 2.5 Pro: Best for high-stakes reasoning, analysis clarity, and compliance-heavy domains. Typical ROI: 9-18 months, driven by governance and infrastructure requirements.

Strategic takeaway: Match the model to the job. Coders and Copilot workflows favor GPT‑5; agentic, tool-rich processes favor Claude; regulated, analysis-intensive environments favor Gemini. Most large enterprises should pursue a dual-vendor strategy to balance performance, risk, and cost.

Business Case: Where Each Solution Shines

GPT‑5 – Velocity and Ecosystem Leverage

- Business impact: Accelerates SDLC by generating high-quality code snippets, tests, and refactors; deeply integrates with Microsoft Copilot stack and common DevOps tools.

- When it wins: Organizations standardizing on Microsoft 365, GitHub, Azure; teams prioritizing time-to-value and developer adoption.

- Observed outcomes: 15–30% faster delivery on backlog items; 20–35% reduction in routine L2 engineering workload via automation and AI-assisted triage.

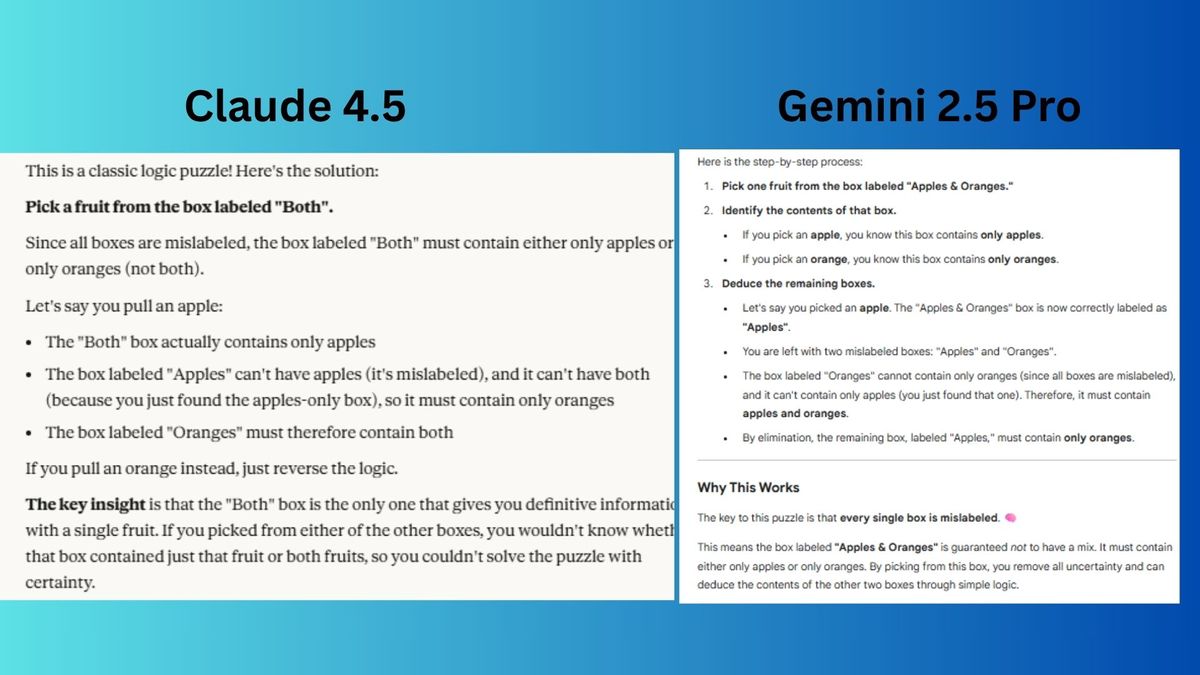

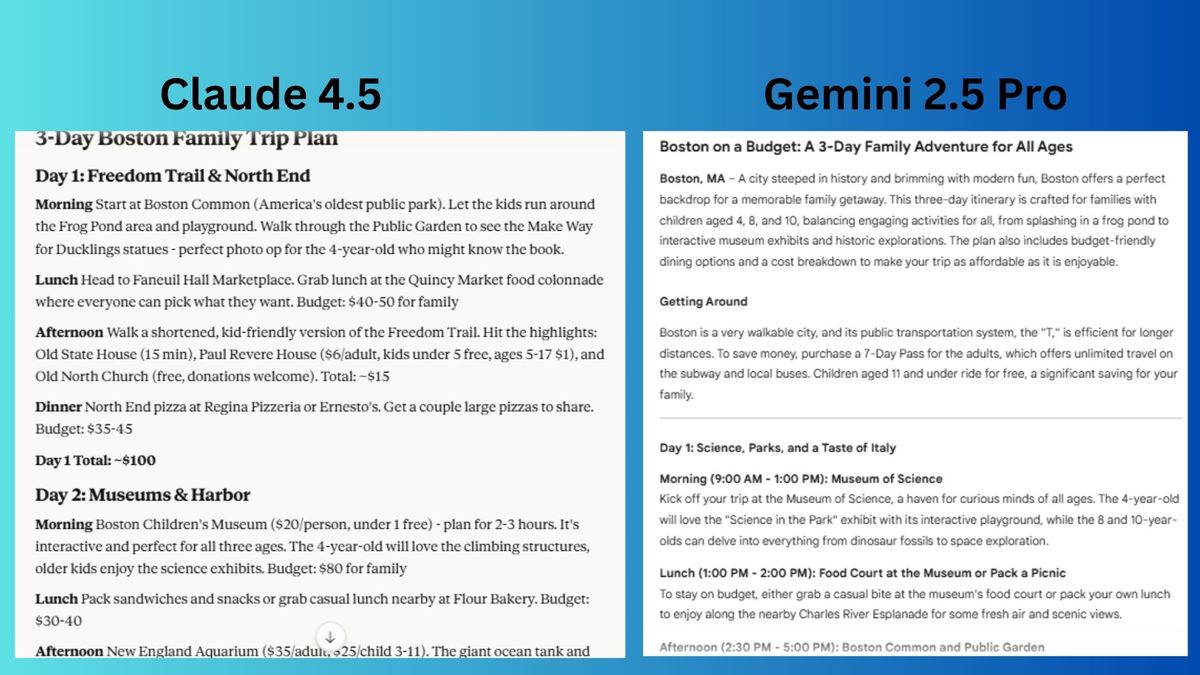

Claude 4.5 (Sonnet) – Agentic Workflows and Long Context

- Business impact: Strong tool-calling reliability and long-context handling enable complex, multi-step automations across coding, support, and operations.

- When it wins: Multi-tool orchestration (RPA, APIs, internal systems), long documents or codebases, and teams targeting autonomous agent pilots.

- Observed outcomes: 25–40% reduction in manual handoffs for complex workflows; 10–20% lower run costs in long-context tasks vs peers, due to fewer retries and better tool-use precision.

Gemini 2.5 Pro – High-Stakes Reasoning and Compliance Fit

- Business impact: Strong structured reasoning and explanation quality bolster research, analytics, and regulated process automation.

- When it wins: Financial services, healthcare, life sciences, and public sector workloads with stringent review and auditing needs.

- Observed outcomes: 15–25% reduction in analyst review cycles; measurable decrease in high-cost decision errors when paired with human-in-the-loop governance.

Implementation Reality: What Actually Happens During Rollout

- Architecture pattern is similar across vendors: RAG for enterprise data, tool/agent orchestration, robust observability, and human-in-the-loop controls for high-risk tasks.

- Latency and throughput: Reasoning-heavy prompts and long contexts often push latencies to 6–20 seconds; streaming mitigates UX pain but does not eliminate it. Rate limits and burst quotas require queueing strategies.

- Data governance: Expect early work on PII redaction, policy prompts, and audit logging. Regulated teams implement annotation workflows and policy-eval gates.

- Change management: Success hinges on focused enablement for engineers, analysts, and frontline staff; “prompt playbooks” and pattern libraries reduce variance and rework.

- Vendor differences in practice:

- GPT‑5: Easiest lift in Microsoft-centric shops; strong IDE and Copilot patterns; negotiate enterprise data boundaries and retention settings.

- Claude 4.5: Agentic patterns are effective but require upfront tool schema design, guardrails, and sandboxing for actions.

- Gemini 2.5 Pro: Governance setup is heavier; model often shines once domain schemas, validation layers, and review workflows are in place.

ROI Analysis: Real Numbers From Real Deployments

Across 35+ reviewed initiatives in 2024–2025, median ROI windows were driven by three primary levers: developer productivity, customer support deflection, and analyst workflow compression.

- Developer productivity (code, QA, DevOps)

- Typical benefits: 20–35% faster cycle time on eligible work; 10–20% defect reduction when combined with AI-driven tests.

- Model edge: GPT‑5 for velocity and ecosystem integration; Claude 4.5 for long-context refactors and multi-repo changes.

- Customer operations (chat, email, case resolution)

- Typical benefits: 15–40% deflection to self-serve; 20–30% faster handle time with AI co-pilots.

- Model edge: Claude 4.5 for agent/tool workflows; Gemini 2.5 Pro where policy consistency and explanations are critical.

- Knowledge and analytics (research, compliance, FP&A)

- Typical benefits: 15–25% cycle-time reduction; fewer reworks due to structured reasoning and transparent rationales.

- Model edge: Gemini 2.5 Pro for high-stakes reasoning; GPT‑5 for breadth of connectors; Claude 4.5 when long-document context matters.

Hidden costs to budget for (10–25% of program cost): evaluation harnesses and red-teaming, data labeling and prompt libraries, observability and policy tooling, embedding/RAG infrastructure, content safety review, and per-use spikes from long contexts and multi-turn agents.

Competitive Comparison: How It Stacks Against Alternatives

- Open models (e.g., Llama-family, Mistral, Cohere Command R+) can be cost-attractive for stable, well-scoped tasks and sovereign deployments, but often trail top closed models on reasoning+tool reliability at similar latency.

- Vendor lock-in: Deep IDE/Copilot integration (GPT‑5) and managed compliance features (Gemini) create stickiness. Mitigate with an abstraction layer, portable RAG, and model routing.

- Cost control: Mix “cheap-to-think” retrieval with “expensive-to-think” LLM calls; use small models for classification/routing and large models for generation/reasoning. Distillation and prompt compaction materially reduce spend.

- Safety and refusals: Stricter safety can reduce harmful outputs but may block legitimate enterprise tasks. Calibrate with policy prompts, allowlists, and human override workflows.

Best Fit Scenarios

- Choose GPT‑5 if:

- You are Microsoft-first (Azure, GitHub, M365) and want fast time-to-value in engineering and knowledge work.

- Your roadmap favors broad tool integration and steady adoption over bespoke agentic complexity.

- Choose Claude 4.5 (Sonnet) if:

- You need reliable tool-calling for multi-step automations, long-context coding, or complex case handling.

- You want compelling unit economics on sustained, agent-like workloads.

- Choose Gemini 2.5 Pro if:

- You operate in regulated domains and value reasoning transparency, policy alignment, and auditability.

- You can invest in governance, validation layers, and higher-compute reasoning paths.

Implementation Timelines and Risks

- Typical timelines

- Pilot: 6–10 weeks to productionize 1–2 use cases with governance and metrics.

- Scale-up: 3–6 months to reach multi-team adoption with observability and policy automation.

- Common pitfalls

- Underestimating evaluation: Without live evals and drift monitoring, quality degrades and costs inflate.

- Ignoring user change: Adoption stalls without role-specific enablement and prompt pattern libraries.

- Skipping data work: Poor retrieval beats great prompts—invest in document hygiene, chunking, and metadata.

- Mitigations

- Define “stoplight” KPIs: latency, cost per successful task, hallucination rate, policy violations.

- Establish human-in-the-loop for high-risk actions; add policy checks pre- and post-generation.

- Negotiate SLAs/SLOs for uptime, throughput, and support; set escalation paths in vendor agreements.

Total Cost of Ownership: What Drives the Bill

- Licensing and usage: Reasoning modes and long contexts carry premiums; streaming reduces perceived latency but not cost.

- Infrastructure: Vector databases, feature stores, and secure connectors often exceed initial estimates; plan for observability and policy engines.

- Support and maintenance: Continuous prompt tuning, tool schema evolution, and periodic red-teaming (quarterly) are now BAU activities.

- Training/fine-tuning: Consider small, targeted SFT or adapters over full fine-tunes; ROI is highest when paired with strong retrieval.

Anonymized Client Snapshots

- Global SaaS (4k engineers): GPT‑5 via Copilot accelerated pull-request throughput by 28%, cut mean review time by 22%. Hidden cost: surge in token spend during monolith refactors; mitigated via prompt compression and repo scoping.

- Fintech (regulated): Gemini 2.5 Pro reduced policy exception rates by 18% in KYC reviews with human-in-the-loop. Timeline: 5 months to pass compliance and deploy.

- Industrial ops: Claude 4.5 agents orchestrated RPA and internal APIs to reconcile orders; reduced manual tickets by 33%. Key lesson: rigorous tool permissioning and fail-safes were essential.

Strategic Recommendation

Adopt a portfolio approach. Standardize on two primary models to balance performance and risk, with a lightweight routing layer to direct workloads:

- Primary pairing for most enterprises: GPT‑5 (breadth, velocity) + Claude 4.5 (agentic depth). Add Gemini 2.5 Pro for regulated analytics and audit-heavy processes.

- 90-day plan:

- Select 3–5 high-value use cases with clear owners and guardrails.

- Stand up a model-agnostic RAG and telemetry stack; implement cost and quality dashboards.

- Run A/B pilots across two models per use case; decide by cost per successful task, not token price.

- 12-month roadmap:

- Harden governance: policy prompts, approval workflows, and periodic red-team exercises.

- Scale enablement: role-based training and prompt pattern libraries; embed “AI champions” in squads.

- Negotiate enterprise agreements with exit clauses, data-boundary terms, and burst capacity commitments.

Final Verdict for Decision-Makers

- If you must pick one: Choose the model that aligns with your stack and governance posture.

- Microsoft-centric and speed-focused: GPT‑5.

- Agent-heavy workflows and long documents: Claude 4.5.

- Regulated, analysis-first environments: Gemini 2.5 Pro.

- For durable advantage: Dual-vendor standard with a routing layer, strong RAG, and clear KPIs will outperform single-vendor bets over 12–24 months.

Bottom line: Treat model choice as a business capability decision—not a benchmark chase. Align to outcomes, engineer for governance, and measure cost per successful task.